Thursday, December 30, 2010

Friday, October 22, 2010

SSIS DB2 and Stored Procedures

The Scenario

Extracting data from a DB2 file using SSIS when the data is accessed through a stored procedure.

With DTS packages this could be done by using a ODBC driver to connect to the DB2.

The Problems

With DTS packages there was an option to choose Other Driver. From this I could set up an ODBC connection to a DB2 database and execute a stored procedure – this acted as my data source. This ODBC connection used a system DSN which pointed at eh db2 database on an AS400 server.

SSIS does not give you the option of using other driver. What they have instead are OLE DB drivers or .Net drivers.

The OLE DB drivers will not allow the execution of a stored proc on the DB2 to retrieve data.

The IBM OLE DB DB2 provider and Microsoft OLE DB DB2 provider do not support using an SQL command that calls a stored procedure. When this kind of command is used, the OLE DB source cannot create the column metadata and, as a result, the data flow components that follow the OLE DB source in the data flow have no column data available and the execution of the data flow fails..

See: http://msdn.microsoft.com/en-us/library/ms141696.aspx

The Dot.Net connection, in SSIS 2008, will allow you to execute the procedure in an Execute Script Task. It will not allow execution in a data flow task – although it will allow you to preview the data in data flow.

After setting up a Linked Server to the DB2 I encountered the same problem – although the error message was slightly misleading and I spent a long time investigating permissions.

So the only options now are to carry out a row by row import by using an Execute TSQL Task to populate a record set object and then rolling through this to import the data or asking the DB2 DBA to use the stored procedures to populate file which I can then import from.

My forum question on MSDN relating to the issue:

http://social.msdn.microsoft.com/Forums/en-US/sqlintegrationservices/thread/7ea40a98-c930-48fc-a961-2fdb977de0d3/#e2edc306-f0f4-45bb-9844-53cf83fb845a

Connect issue raised with Microsoft:

https://connect.microsoft.com/SQLServer/feedback/details/615901/ssis-db2-and-stored-procedures

Please follow the link and vote if the issue effects you and maybe Microsoft will bring out a new driver.

Thursday, October 21, 2010

Importing Large Amounts of Data

The Problem

I have a system which imports large amounts of data over night. This will normally be in the low millions. If it fails for a few nights it can then be tens of millions. The problems is space – I don't have enough drive space to allow the transaction log to grow large enough to accommodate more than two million rows of data.

The Solution

Create a variable called @loopRowCounter.

Create Another called @loopCount

Use an execute TSQL task to populate that variable with the number of rows in the data source.

You can do this by:

Under General

SQL command should start like this - SELECT count(*) as loopRowCount FROM …

Set the ResultSet to single row

Under Result Set

Set result name to loopRowCount and set it to match your variable - User::loopRowCounter.

Now you have a variable with the number of rows in.

Move on to a For Loop.

In InitExpressions enter this @loopCount = 2000002 – this is to set a default value

In EvalExpression enter this @loopCount > 0

In asignExpression enter @loopCount = @loopRowCounter – inside the container @loopRowCounter will decremented.

Now use a data flow and in the Data source use Select Top (500000) …

You will need to do a check that the rows in the source are not in the destination – I do a join on the table and compare ids

This will limit the flow of data to 500000 rows.

Next got to an Execute SQL Task

Under Parameter Mapping

Select the variable Name User::loopRowCounter give the parameter a name and set it to type Long with a size of 4

Under General

SQL command should start like this:

checkpoint;

SELECT ? - 500000 as loopRowCount

Set the ResultSet to single row

Under Result Set

Set result name to loopRowCount and set it to match your variable - User::loopRowCounter.

We know how may rows were in the table and we assigned that value to User::loopRowCounter. We know we have just move 500000 out so we subtract 500000 from the parameter which represents User::loopRowCounter and the result is then assigned back to User::loopRowCounter.

Now, no matter how many millions of records go through my log file only has to cope with 500000 at a time.

Sunday, October 3, 2010

Rename a SQL Server

Working with VMs a server can be cloned with SQL Server installed. The server is renamed but SQL server maintains its original name.

Run this script in SSMS:

sp_dropserver 'old server name'

sp_addserver 'new server name', 'local'

Restart the SQL Server service then test the rename worked:

Select @@servername;

Make sure the SQL Agent is running – it will stop when the sql service stops. If you restart sql server service through the SQL configuration control panel it should restart the sql agent for you.

Thursday, September 2, 2010

Date Parameters appear in US Format - SSRS Reports

In reporting services a new report is created. The report region/Language is set to English(UK). A date parameter is created.

When the report is run the date parameters are showing up in mm/dd/yyyy format

The Fix

A parameter will take a label and a value field.

Change your query to have a label formatted in the way you like and the value left raw:

Select Right('0' + Cast(Day(dateRange) as Varchar(2)),2) +'-'+ Right('0' + Cast(Month(dateRange)as Varchar(2)),2) + '-'+ cast(Year(dateRange) as char(4)) as label, dateRange as value

FROM dbo.vwDateRange

Order by dateRange desc

Where daterange is a smalldatetime

Set the report parameters to display label as the label and value as value

Thursday, August 19, 2010

Replication: Distributor not set up correctly

The Distributor has not been installed correctly. Could not enable database for publishing

To fix this I ran these three scripts.

sp_adddistributor 'NameofDistributorServer';

sp_adddistributiondb N'NameofDatabaseBeingReplicated';

sp_adddistpublisher @publisher = 'NameofDistributorServer', @distribution_db = N'NameofDatabaseBeingReplicated';

This then allowed me to run:

use master;

exec sp_replicationdboption N'NameofDatabaseBeingReplicated ', N'publish', N'true'

Monday, August 2, 2010

70-442

I liked this exam although I felt there was a little too much XML and cursors and not enough TSQL. The 70-441 exam felt a bit false and strained - I am going to word this question so that you have to pick one of the new technologies rather than solving a problem.

Well that's that for now, I'll do the 2008 upgrade next year. I will probably go for the 2008 DBA upgrade this year. This year I am planning to take the 70-445 exam: TS: Microsoft SQL Server 2005 Business Intelligence - Development and Maintenance. Then I need the two windows admin exams to finally complete the SLQ 2000 DBA exams. I have been putting those off as I do so little with Windows outside of SQL server and a bit with Active Directory.

Pivot or Sum

Here are the two queries:

Select [Reviewed] + [Logged] as [Queue], [Open] As Development, [Testing], [Pending Imp],

[Open] + [Testing] + [Pending Imp] as [Total In Progress],

[Reviewed] + [Logged] + [Open] + [Testing] + [Pending Imp] as [Grand Total]

from (Select [state] FROM dbo.tblCombinedSnapshto) Datatable

Pivot

(

Count([state])

For [state]

In ([Reviewed], [Logged], [Open], [Testing], [Pending Imp])

) Pivottable;

Select Sum(case when [state] = 'Reviewed' or [state] = 'Logged' then 1 else 0 end) as [Queue],

Sum(case when [state] = 'Open' then 1 else 0 end) as Development,

Sum(case when [state] = 'Testing' then 1 else 0 end) as Testing,

Sum(case when [state] = 'Pending Imp' then 1 else 0 end) as [Pending Imp],

Sum(case when [state] = 'Open' or[state] = 'Testing'

or [state] = 'Pending Imp' then 1 else 0 end) as [Total In Progress],

Count(*) as [Grand Total]

from dbo.tblCombinedSnapshto;

The results were:

PIVOT timing

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 38 ms.

IO Table 'tblCombinedSnapshto'. Scan count 1, logical reads 8, physical reads 1, read-ahead reads 48, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

2000 timing

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 50 ms.

IO Table 'tblCombinedSnapshto'. Scan count 1, logical reads 8, physical reads 1, read-ahead reads 48, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

So for disk usage they were equal. For speed Pivot wins out.

Only a small scale test but worth doing now and then.

Monday, May 10, 2010

IsNull and Dates

DECLARE @x as datetime;

set @x = NULL;

SELECT IsNull(@x,-1) AS Col_1, IsNull(NULL,-1) AS Col_2;

The actual result was 1899-12-31 00:00:00.000,-1

Why did it happen?

The reason it does this is because dates can be given INT values where 0 = 1900-01-01

All other dates are relative to that so:

1 = 1900-01-02

-1 = 1899-12-31

SELECT IsNull(@x,-1) AS Col_1;

Is the same as set @x = -1;

Which is the same as

set @x = '1899-12-31 00:00:00.000';

So it did assign the value -1 to the date which then translated to Dec 31st 1899

Saturday, May 8, 2010

SSIS: Configuration

Nearly everything in the package can be configured.

The main items you will want to configure are the passwords in server connections along with the name of the server and possible the initial catalog (database).

To set up a configuration option right click the package main window and select package configurations.

The options available are to use an XML file, Registry Entry, Parent Package Variable or a SQL database.

In this example I have a database called dataimports which I am going to use. In dataimports I have created a schema for each batch of work I use. This means I can separate out all the SSIS logging and configuration and I can give users access through the schemas.

Once you select the SQL server option you will need to select a database connection to use and then a table. If you already have config tables the drop down box will list them – else you can create a new one. You will need to type in a configuration filter. This is a key word that will match up the configuration settings in the table with this package. The name should be easily identifiably like >> DataDownload.

When you click next you will see a list of configurable items. If this is a new package there will not be very many.

You should try to restrict the configured values to the ones really needed else you will make reading the configuration table difficult which can lead to errors.

A config file should be used to store secure data – like passwords. It should also be used to store values which will or could change. For example a package which emails a user upon completion could have the To field of the email task configured. This way if you need to change the recipient you can change the config table. If a server name is going to change as the package is deployed then store the server name in a config file. You shouldn’t store static or trivial data like the protection level of a database connection – this will probably never change; or the connection string to a database and then all the individual elements of the connection. If you are using Windows Authentication you may not need to store any connection data.

What it looks like in the table:

I have created a primary key on ConfigurationFeature and PackagePath – the table is created without any primary key.

Note for passwords the value stored in the config file will be *****. This is a place holder. You will need to change this value to the actual password and set security on the table to stop people reading it.

Wednesday, May 5, 2010

Jobs failing with the error – job can not start as it is suspended

When this happens you need to run this script:

use msdb;

delete from msdb.dbo.syssubsystems;

exec msdb.dbo.sp_verify_subsystems 1;

go

Now restart the SQL Agent

Reference

http://support.microsoft.com/?kbid=914171

Properties and other dialogs failing in SSMS

TITLE: Microsoft SQL Server Management Studio

------------------------------

Cannot show requested dialog.

------------------------------

ADDITIONAL INFORMATION:

Cannot show requested dialog. (SqlMgmt)

------------------------------

There is no row at position 0. (System.Data)

Problem is to do with system table spt_values being deleted.

Run this script to repair - the location may be different on your server.

C:\Program Files\Microsoft SQL Server\MSSQL\MSSQL.1\install\u_tables.sql

Remove a File and Filegroup from Database

Before a filegroup can be removed all files associated with it need to be deleted.

Before a file can be deleted all objects associated with it need to be removed.

Just removing a file not a filegroup

If you have multiple files on a filegroup and you just want to get rid of one you can run this command.

DBCC ShrinkFile(logical_file_name,EmptyFile);

This will move any objects in the file onto the other files in the filegroup. You can then delete the file.

ALTER DATABASE DatabaseName REMOVE FILE logical_file_name;

Removing a file and a filegroup

To do this you will have to make sure that here are no objects associated with the file group. This could be Tables etc, Indexes, or even Blob objects.

This bit of code will show you the objects on a file group.

SELECT o.[name], o.[type], i.[name], i.[index_id], f.[name]

FROM sys.indexes i

INNER JOIN sys.filegroups f

ON i.data_space_id = f.data_space_id

INNER JOIN sys.all_objects o

ON i.[object_id] = o.[object_id]

WHERE i.data_space_id = f.data_space_id

AND o.type = 'U' -- User Created Tables

I got this from:

http://blog.sqlauthority.com/2009/06/01/sql-server-list-all-objects-created-on-all-filegroups-in-database/

If there are objects you want to keep you will need to move them to another filegroup. To move a non-clustered index you will need to drop and recreate it on the new file group. To move a table you need to drop and recreate the clustered index.

Once the filegroup has no user objects empty you can run the same commands for removing a file as – shrinkfile and remove file.

Then you will need to run the code below to drop the filegroup:

DATABASE [databaseName] REMOVE FILEGROUP [Filegroupname];

Remember: you can not drop the Primary filegroup.

Friday, April 30, 2010

Table Diff: Compare the contents of two tables

Table diff will let you compare two tables and generate a change script to sync them up.

By default it is installed here:

"C:\Program Files\Microsoft SQL Server\90\COM\tablediff.exe"

In this example I am comparing two tables in the same database called Live_Support. The tables are LF29699_Orig and LF29699_New.

I have created a batch file called comapretables.cmd.

The contents of the table looks like this:

"C:\Program Files\Microsoft SQL Server\90\COM\tablediff.exe" -sourceserver sqlmgmt1 -sourcedatabase Live_Support -sourcetable LF29699_Orig -destinationserver sqlmgmt1 -destinationdatabase colt_Live_Support -destinationtable LF29699_new -f c:\LF29699_diff.sql

Execute this and you get a file c:\LF29699_diff.sql which is a change script for that table:

-- Host: sqlmgmt1

-- Database: [Live_Support]

-- Table: [dbo].[LF29699_new]

SET IDENTITY_INSERT [dbo].[LF29699_new] ON

UPDATE [dbo].[LF29699_new] SET [GroupIndex]=3 WHERE [pkid] = 3

UPDATE [dbo].[LF29699_new] SET [GroupIndex]=2 WHERE [pkid] = 683

SET IDENTITY_INSERT [dbo].[LF29699_new] OFF

You can see from the script that there are 2 differences between the tables. It does not tell you what the values in the table are currently; you do have the primary key value though so you can look it up.

The two tables you compare will need to have primary keys defined on them.

BOL tableDiff.Exe: 'http://msdn.microsoft.com/en-us/library/ms162843.aspx

Friday, April 23, 2010

Replication Error - Login Failure

This will show up in the SQL Error Logs as:

Login failed for domain\username

Followed by

Error: 18456, Severity: 14, State: 6

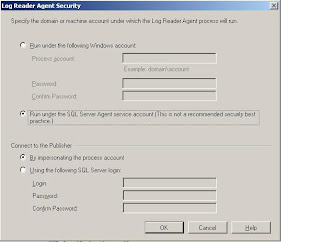

The error code means that a windows log-in was entered in the place of a SQL log-in. In the case I had it was in the Log Agent Reader security.

In the section connect to publisher I had entered a windows service account (although it says quite clearly here: using the following SQL Server Login). I didn’t see this though as I added replication through a script which I had adapted.

Monday, April 19, 2010

SQL Cursors

I had thought that if an item came within teh scope of a cursor it would remain there until removed. However, cursors work a little like views, if you cange a value which would remove an item from a view you will no longer see it in the view. If you change the value of a data row which would put it outside the scope of teh cursor the cursor will drop that row.

This was tested on SQL 2005 sp3 – I assume it will be the same on other versions but I haven’t tested them.

I have a simple table:

CREATE TABLE [dbo].[test](

[pkid] [int] IDENTITY(1,1) NOT NULL,

[descript] [varchar](5) NOT NULL,

[value1] [int] NOT NULL,

[value2] [int] NOT NULL,

CONSTRAINT [PK_test] PRIMARY KEY CLUSTERED

(

[pkid] ASC

);

The table has 3 rows:

| Pkid | descript | value1 | value2 |

| 1 | test1 | 0 | 0 |

| 2 | test2 | 0 | 0 |

| 3 | test1 | 0 | 0 |

I then execute this script against the table:

Declare @dbName as varchar(5);

Declare @x as int;

Set @x = 1;

DECLARE dbCursor CURSOR Local Fast_Forward FOR

select descript From dbo.Test

WHERE value2 = 0;

OPEN dbCursor

FETCH NEXT FROM dbCursor INTO @dbName

WHILE @@FETCH_STATUS = 0

BEGIN

Print @dbName

Update dbo.Test

Set value2 = @x

Where descript = @dbname;

Set @x = @x+1;

FETCH NEXT FROM dbCursor INTO @dbName;

END

CLOSE dbCursor;

DEALLOCATE dbCursor;

The results in the table are:

| Pkid | descript | value1 | value2 |

| 1 | test1 | 0 | 1 |

| 2 | test2 | 0 | 2 |

| 3 | test1 | 0 | 1 |

This shows that because the value2 value of pkid 3 changed from 0 to 1 on the first update it dropped out of the cursors scope.

Tuesday, March 23, 2010

Moving System Databases: SQL Server 2000 and 2005

Notes

Tempdb is built when ever the sql server restarts so you tell it where you want the database then restart sql and it will build the database there. You will then need to delete the old files.

In SQL 2005 the resource database log and data file have to go into the same directory as the master data file.

sp_helpfile will show you where the database files are – good to run after the change to check they are where you think they are.

Move Tempdb

Run the script below using the correct path for the server:

USE master;

GO

ALTER DATABASE tempdb

MODIFY FILE (NAME = templog, FILENAME = 'F:\SQLLogs\templog.ldf');

GO

ALTER DATABASE tempdb

MODIFY FILE (NAME = tempdev, FILENAME = 'E:\SQLData\tempdb.mdf');

GO

For this next step you can wait and do the stop /start for msdb

Next stop Sql Server

Restart SQL Server

Go back and delete the old files

Move Model and MSDB Files

First run this – with the filename being the path required

ALTER DATABASE Model MODIFY FILE (NAME = modellog, FILENAME = 'F:\SQLLogs\modellog.ldf');

Go

ALTER DATABASE MSDB MODIFY FILE (NAME = MSDBLog, FILENAME = 'F:\SQLLogs\msdblog.ldf');

Go

ALTER DATABASE Model

MODIFY FILE (NAME = modeldev, FILENAME = 'E:\SQLData\model.mdf');

Go

ALTER DATABASE MSDB

MODIFY FILE (NAME = MSDBData, FILENAME = 'E:\SQLData\msdbdata.mdf');

go

Stop Sql Server

Move the files

Restart SQL Server

Move Master and Resource Log

1. From the Start menu, point to All Programs, point to Microsoft SQL Server 2005, point to Configuration Tools, and then click SQL Server Configuration Manager.

2. In the SQL Server 2005 Services node, right-click the instance of SQL Server (for example, SQL Server (MSSQLSERVER)) and choose Properties.

3. In the SQL Server (instance_name) Properties dialog box, click the Advanced tab.

4. Edit the Startup Parameters values to point to the planned location for the master database data and log files, and click OK. Moving the error log file is optional.

The parameter value for the data file must follow the -d parameter and the value for the log file must follow the -l parameter.

5. Stop the instance of SQL Server by right-clicking the instance name and choosing Stop.

6. Move the master.mdf and mastlog.ldf files to the new location.

7. Start the instance of SQL Server in master-only recovery mode by entering one of the following commands at the command prompt. The parameters specified in these commands are case sensitive. The commands fail when the parameters are not specified as shown.

1. For the default (MSSQLSERVER) instance, run the following command.

NET START MSSQLSERVER /f /T3608

2. For a named instance, run the following command.

NET START MSSQL$instancename /f /T3608

8. Using sqlcmd commands or SQL Server Management Studio, run the following statements. Change the FILENAME path to match the new location of the master data file. Do not change the name of the database or the file names.

Save the script into a file called c:\move.sql (make sure the paths are right for your server NOTE the ldf and mdf go to the same location as the master mdf

ALTER DATABASE mssqlsystemresource MODIFY FILE (NAME=log, FILENAME=

'E:\SQLData\mssqlsystemresource.ldf');

ALTER DATABASE mssqlsystemresource MODIFY FILE (NAME=data, FILENAME=

'E:\SQLData\mssqlsystemresource.mdf');

Now run sqlcmd

"C:\Program Files\Microsoft SQL Server\90\tools\binn\SQLCMD.EXE" -E -S . -d master /i c:\move.sql

1. Move the files to the new location.

2. Set the Resource database to read-only by running the following statement.

ALTER DATABASE mssqlsystemresource SET READ_ONLY;

1. Stop the instance of SQL Server.

2. Restart the instance of SQL Server using the sqlcmd this time without the flags /f /t3608

3. Check file and log locations:

SELECT name, physical_name AS CurrentLocation, state_desc

FROM sys.master_files

Moving System Databases in SQL Server 2000

Moving the Master Database

The location of the master database and its associated log can be changed from within SQL Server Enterprise Manager. To do this:

Open SQL Enterprise Manger and drill down to the proper database server.

Right-click the SQL Server in Enterprise Manager and click Properties.

Click the Startup Parameters button and you will see something similar to the following entries:

-dC:\MSSQL\data\master.mdf

-eC:\MSSQL\log\ErrorLog

-lC:\MSSQL\data\mastlog.ldf

Change these values as follows:

Remove the current entries for the Master.mdf and Mastlog.ldf files.

Add new entries specifying the new location:

-dE:\SQLData\master.mdf

-lL:\SQLLogs\mastlog.ldf

Stop SQL Server.

Copy the Master.mdf and Mastlog.ldf files to the new locations

Restart SQL Server.

Moving MSDB and Model

When you are using this procedure to move the msdb and model databases, the order of reattachment must be model first and then msdb.

To move the MSDB follow these steps:

In SQL Server Enterprise Manager, right-click the server name and click Properties. On the General tab, click Startup Parameters.

Add a new parameter as -T3608. Select OK to close the Startup Parameters and the Properties page. You will not be able to access any user databases at this time. You should not perform any operations other than the steps below while using this trace flag.

Drill down to the msdb database and then right click on it. Select Properties and then select the Options tab.

Select Restrict access and then Single User. Close the Properties sheet by selecting OK.

Stop and then restart SQL Server.

Open SQL Query Analyzer and then detach the msdb database using the following commands:

use master

go

sp_detach_db 'msdb'

go

Move the Msdbdata.mdf and Msdblog.ldf files from the current location to the new location.

Reattach the MSDB database as using the following commands:

use master

go

sp_attach_db 'msdb','E:\SQLData\msdbdata.mdf','L:\SQLLogs\msdblog.ldf';

go

Remove the -T3608 trace flag from the Startup Parameters box in the SQL Enterprise Manager.

Stop and then restart SQL Server.

Moving Tempdb

*** This is the same as the SQL 2005 method ***

Run the script below using the correct path for the server:

USE master;

GO

ALTER DATABASE tempdb

MODIFY FILE (NAME = templog, FILENAME = 'F:\SQL_Logs\templog.ldf');

GO

ALTER DATABASE tempdb

MODIFY FILE (NAME = tempdev, FILENAME = 'E:\SQL_Data\tempdb.mdf');

GO

Next stop Sql Server

Restart SQL Server

Go back and delete the old files

Monday, March 15, 2010

Replication failed between two 2000 machines: server execution failed

The error that was showing stated: server execution failed

Ran the snapshot replication agent from the command line.

To do this go to the sql folder with snapshot.exe In this case C:\Program Files\Microsoft SQL Server\80\COM

Then run snapshot.exe followed by the string found in the second step of the start agent job. In this case: -Publisher [Server2Name] -PublisherDB [replictedDatabaseName] -Distributor [Server2Name] -Publication [nameOfPublication] -DistributorSecurityMode 1

This then generated the real error: ATL71.Dll was missing from – series of directories all defined by PATH.

I did a search and found this dll in the temp directory. I copied it to system32 and re ran the agents.

They are now distributing transactions. It took a while to catch back up again though.

Wednesday, February 3, 2010

Error when using linked server

------------------------------

Failed to retrieve data for this request. (Microsoft.SqlServer.SmoEnum)

For help, click: http://go.microsoft.com/fwlink?ProdName=Microsoft+SQL+Server&LinkId=20476

------------------------------

ADDITIONAL INFORMATION:

An exception occurred while executing a Transact-SQL statement or batch. (Microsoft.SqlServer.ConnectionInfo)

------------------------------

The EXECUTE permission was denied on the object 'xp_prop_oledb_provider', database 'mssqlsystemresource', schema 'sys'. (Microsoft SQL Server, Error: 229)

For help, click: http://go.microsoft.com/fwlink?ProdName=Microsoft+SQL+Server&ProdVer=09.00.4266&EvtSrc=MSSQLServer&EvtID=229&LinkId=20476

FIX

GRANT EXECUTE ON xp_prop_oledb_provider TO [UserDomain\Account Name];

Error when using dtutil to deploy SSIS packages

Had to give the user's account access to msdb and the dts_admin and dts_operator roles.

Monday, January 25, 2010

SSMS short cuts

SSMS allows you to create your own short cut keys:

Under the Menu Tools Select Options

Under Environment >> Keyboard there is a list of shortcut commands.

A Couple are pre-populated Alt_F1 sp_help; Ctrl+1 sp_who; Ctrl+2 sp_lock;

I have added:

Ctrl+F1: master..xp_fixedDrives; Shows how much space is available on the fixed drives

Ctrl+3: Select * from sys.databases; Lists all databases

Ctrl+4: SELECT name AS 'File Name' , physical_name AS 'Physical Name', size/128 AS 'Total Size in MB', size/128.0 - CAST(FILEPROPERTY(name, 'SpaceUsed') AS int)/128.0 AS 'Available Space In MB' FROM sys.database_files; Shows how much space is available in SQL Data files

Ctrl + 5: DBCC SQLPERF(logspace); shows log files, the size of the file and % free space.

I have since added a couple more. One shows server waits and another shows server activity in a bit more details than sp_who2. I think I'll keep these as floating short cuts and change them depending on what I am working on or investigating.